What is a network microburst and how can you detect them?

When packets are transmitted from one interface to another, they aren’t necessarily delivered at a steady rate. When a multitasking OS gives CPU time to the network process, that process sends as much data as it can in the shortest possible time. In addition, at each “hop” that data traverses, buffering and other resource bottlenecks inherently make most traffic “bursty”.

However, not all bursts are easily detected. A tool with fine enough granularity is required to see these “microbursts” so they can be analyzed as the source of latency, jitter, and loss.

What do microbursts look like?

Microbursts occur when a significant number of queued packets are delivered within an extremely short time. Microbursts that are large or frequent enough can cause network buffers to overflow (resulting in packet loss), or cause network processors further down the line to deliver stored packets at odd intervals, causing latency and jitter. Overall throughput that is much lower than line rate, or latency that is long or inconsistent, may indicate that too many microbursts are occurring.
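To make the buffer-overflow effect concrete, here is a minimal sketch of a tail-drop FIFO buffer in front of a slower egress link. The model itself, the function name `simulate_fifo`, the packet size, the link rates, and the 20-packet buffer are all assumptions chosen for illustration; real devices buffer and drop in more complicated ways.

```python
from collections import deque


def simulate_fifo(arrivals_us, service_us, capacity):
    """Tail-drop FIFO model: return (delivered, dropped) packet counts.

    arrivals_us: sorted packet arrival times in microseconds
    service_us:  time the egress link needs to send one packet
    capacity:    most packets the buffer can hold, including the one being sent
    """
    in_buffer = deque()   # departure times of packets still in the buffer
    last_departure = 0
    delivered = dropped = 0
    for t in arrivals_us:
        # Forget packets that finished transmitting by time t.
        while in_buffer and in_buffer[0] <= t:
            in_buffer.popleft()
        if len(in_buffer) >= capacity:
            dropped += 1          # buffer full: this packet is lost
            continue
        # Transmission starts as soon as the egress link is free.
        last_departure = max(t, last_departure) + service_us
        in_buffer.append(last_departure)
        delivered += 1
    return delivered, dropped


# Hypothetical numbers: 100 packets of 1250 bytes, 10 Mbps egress (1000 us per packet).
burst = [i * 100 for i in range(100)]     # arrives at 100 Mbps (one packet per 100 us)
paced = [i * 1000 for i in range(100)]    # same packets spread out at 10 Mbps

print("burst:", simulate_fifo(burst, service_us=1000, capacity=20))
print("paced:", simulate_fifo(paced, service_us=1000, capacity=20))
```

Under these assumed values, the burst overruns the buffer and most of its packets are dropped, while the paced traffic gets through intact even though both carry the same amount of data.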

The problem with detecting microbursts in a network trace arises when a tool’s time resolution is not fine enough to show the bursty nature of the data. Since microbursts occur on the order of microseconds, a graph of overall packets-per-second will make the transmission appear smooth.

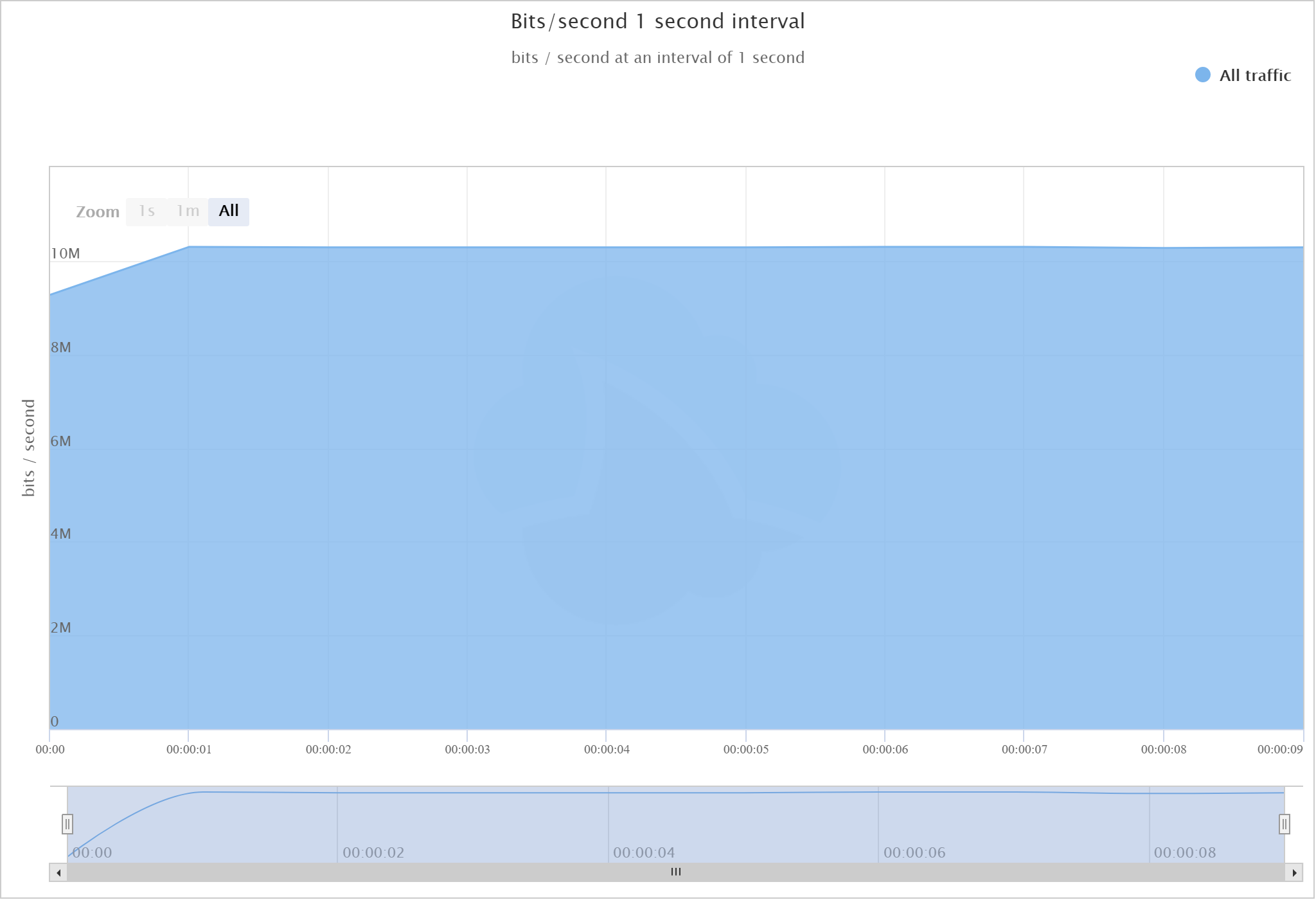

Compare these two graphs, for example. The first shows bits-per-second over time with a resolution of one second.

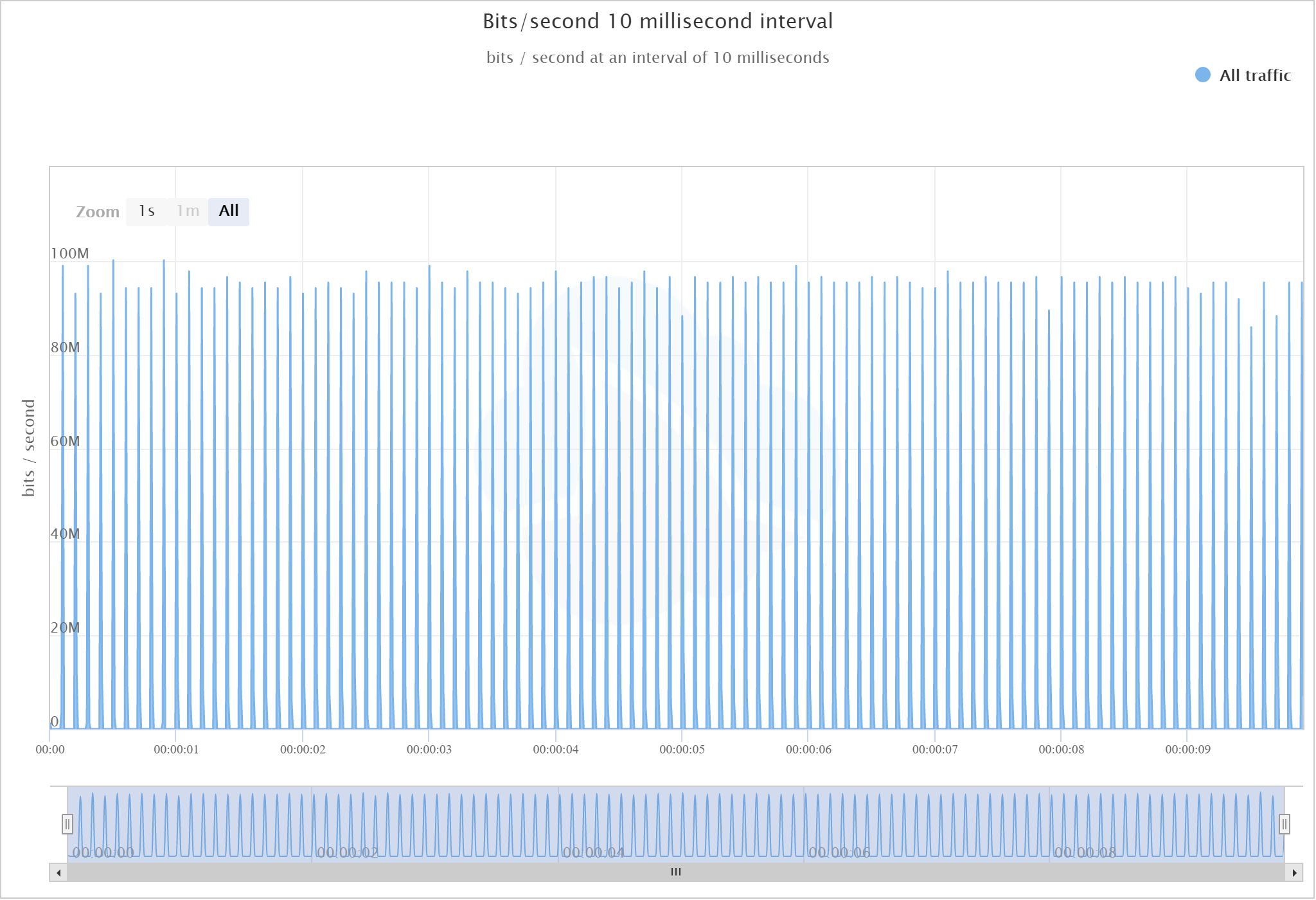

It appears as though the data is being transmitted at a consistent rate around 10 Mbps. CloudShark supports very short time resolutions for graphs, so let’s look at that same capture at a closer resolution:

What is actually being transmitted is a series of data bursts at 100 Mbps (the data rate of our link, in this case). As you can see, there’s a big difference in the real frequency of those packets!
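The same effect can be reproduced outside a graphing tool by binning packet timestamps at two different widths. The sketch below is not how CloudShark computes its graphs; it uses a synthetic capture (an assumption, not real trace data) and a hypothetical helper named `throughput_bps`. Timestamps are kept as integer microseconds to avoid floating-point binning errors.

```python
from collections import Counter


def throughput_bps(packets, bin_us):
    """Return {bin_index: bits per second} for (timestamp_us, size_bytes) packets."""
    bits = Counter()
    for ts_us, size_bytes in packets:
        bits[ts_us // bin_us] += size_bytes * 8
    # Scale the bits counted in each bin up to a per-second rate.
    return {b: total * 1_000_000 // bin_us for b, total in bits.items()}


# Synthetic capture (hypothetical): every 100 ms, 100 packets of 1250 bytes
# are sent back to back at 100 Mbps, i.e. one packet every 100 us.
packets = [(burst * 100_000 + i * 100, 1250)
           for burst in range(10) for i in range(100)]

per_second = throughput_bps(packets, 1_000_000)
per_millisecond = throughput_bps(packets, 1_000)

print("peak at 1 s resolution: ", max(per_second.values()), "bps")
print("peak at 1 ms resolution:", max(per_millisecond.values()), "bps")
print("busy 1 ms bins:", len(per_millisecond), "of 1000")
```

With one-second bins the synthetic traffic looks like a steady 10 Mbps. With one-millisecond bins the same packets show up as short runs at the full 100 Mbps line rate, separated by idle time.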

Why is this a problem?

Microbursts are difficult to detect and can cause all sorts of problems, particularly with applications that require reliable, high-speed, low-latency data transmissions. This training article on microbursts shows how we can use iPerf to simulate these bursts to demonstrate their effects on a network.
